Blog

Use HostingEnvironment.MapPath

When running a service under IIS, the HttpContext.Current object can be null, so a call to HttpContext.Current.Server.MapPath fails with a NullReferenceException.

    fileName = HttpContext.Current.Server.MapPath(fileName);

The solution is to use System.Web.Hosting.HostingEnvironment.MapPath instead, which does not depend on the current request.

    fileName = System.Web.Hosting.HostingEnvironment.MapPath(fileName);

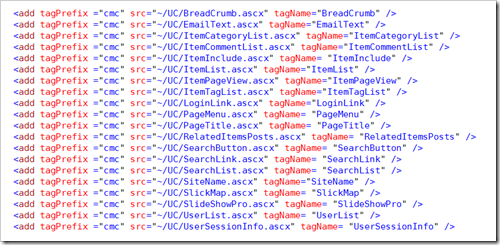

Custom Controls In ASP.NET

ASP.NET has that neat little feature where all the built-in controls can start with <asp:. It makes it easy to code. I had numerous custom controls, and I always had to register each control on every page that used it. Scott Guthrie had a great tip on how to register the controls once in the web.config file so that it doesn’t have to be done in each page.
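A minimal sketch of what such a registration can look like in web.config. The tag prefix, namespace, assembly name, and .ascx path are hypothetical placeholders, not values from the original tip.

```xml
<configuration>
  <system.web>
    <pages>
      <controls>
        <!-- Compiled custom controls: every control in the namespace gets the "my" prefix -->
        <add tagPrefix="my" namespace="MyCompany.Web.Controls" assembly="MyCompany.Web.Controls" />
        <!-- A single user control registered by path -->
        <add tagPrefix="my" tagName="Header" src="~/Controls/Header.ascx" />
      </controls>
    </pages>
  </system.web>
</configuration>
```

With an entry like this in place, a page could use <my:Header runat="server" /> without its own Register directive.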

I’m really surprised I haven’t read about this earlier.


Windows Live Writer XMLRPC and BlogEngine.Net

BlogEngine.Net supports XMLRPC and the MetaWeblog API. This allows it to support other tools like Windows Live Writer. But where are the specifications for the API and WLW found? I came across some interesting related links along the way in trying to figure out how to add proper slug support for pages in BlogEngine.Net.

WordPress outlines the definitive guide to the WordPress API at http://codex.wordpress.org/XML-RPC_wp. This includes:

- wp.getTags

- wp.getPage

- wp.getPages

- wp.newPage

- wp.deletePage

- wp.editPage

- wp.getCategories

- wp.getAuthors

Several other APIs are listed on the site. The reference is the only resource I’ve found that outlines the structures used for XMLRPC for the MetaWeblog API.

Windows Live Writer documents all of the options and capabilities supported across the MetaWeblog, Movable Type, and WordPress APIs in the article Defining Weblog Capabilities with the Options Element.

In BlogEngine.Net the Windows Live Writer manifest is defined in the file wlwmanifest.xml. This can be extended to support some of the additional options supported by WLW. However, additional code is required in the BlogEngine.Net XMLRPCRequest, XMLRPCResponse and MetaWeblog files to support these features.

One of the interesting design decisions about BlogEngine.Net is that it supports XML files and uses a Guid as the ID for all posts and pages. The MetaWeblog API wants to use an int for the ID. This causes some unexpected results when selecting a parent or the author in page editing mode with WLW. This is just a design tradeoff of not using a database (sequential IDs are difficult and cumbersome to generate without a database).

Interestingly enough, there is an unusual nuance either in the API or WLW, in that in order to get a hierarchical indented list in the dropdown list of pages, XMLRPCResponse.WriteShortPages needs to set the wp_page_parent_id to the “title” and the page_parent_id to the “guid” of the page. It seems a bit backwards to me.

I’ve extended the MetaWeblog API in BlogEngine.Net to support slugs properly. The trick was to write a wp_slug value in the XML for pages, the same way the post code does.

The following code was added to XMLRPCResponse.WritePage and WritePages. The MWAPage class must also carry the slug to support this.

    // wp_slug
    data.WriteStartElement("member");
    data.WriteElementString("name", "wp_slug");
    data.WriteStartElement("value");
    data.WriteElementString("string", _page.slug);
    data.WriteEndElement();
    data.WriteEndElement();
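To see what that member looks like on the wire, here is a small standalone sketch that writes the same element sequence with an XmlWriter. The class and method names are hypothetical; only the element names come from the code above.

```csharp
using System.IO;
using System.Xml;

public static class SlugMemberDemo
{
    // Writes one XML-RPC struct member named wp_slug and returns the XML text.
    public static string WriteSlugMember(string slug)
    {
        var settings = new XmlWriterSettings { OmitXmlDeclaration = true };
        var output = new StringWriter();
        using (XmlWriter data = XmlWriter.Create(output, settings))
        {
            data.WriteStartElement("member");
            data.WriteElementString("name", "wp_slug");
            data.WriteStartElement("value");
            data.WriteElementString("string", slug);
            data.WriteEndElement(); // value
            data.WriteEndElement(); // member
        }
        return output.ToString();
    }
}
```

Calling SlugMemberDemo.WriteSlugMember("about-us") returns <member><name>wp_slug</name><value><string>about-us</string></value></member>.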

I’ve published the changes to the MetaWeblog API but keep in mind these changes go way beyond just this change and break compatibility with BlogEngine.Net.

Download: MetaWebLog.zip (15.98 kb). Includes XMLRPCRequest, XMLRPCResponse, and MetaWeblog changes.


Exchange Hosting

Is Software as a Service hype or real? Microsoft Exchange Server hosting is not only real, but extremely cost effective.

I’ve worked with organizations with anywhere from 25 to 10,000 people using Microsoft Exchange. All of them had to deal with users in multiple countries and locations. It used to be simple to host an Exchange server. If you had a spare server, you just installed it, did a few simple configurations, and you were done. Reboot it once a year just for good measure.

Then SPAM started flooding the servers, so we put SPAM filters on. Then more SPAM, more and more filtering software. Then patches and hot fixes, monthly, then weekly. Then new government regulations on email, data retention and archiving came into force. Then Microsoft decided you couldn’t run Exchange Server on a domain controller. That was when I decided the cost of hosting an Exchange Server for a small organization was too much. I looked for some alternatives.

POP / SMTP mail is cheap, but doesn’t provide the synchronization (on the web, on the laptop, on the desktop), or calendar coordination between teams that Exchange does.

With hosted Exchange services running $9-$14 USD per user per month for 2 – 3 GB of storage per mailbox, it was a no-brainer. You can figure the total cost of ownership for hardware + maintenance + redundancy costs, and it doesn’t take long to see the cost savings for a hosted service. I came up with the following cost comparisons based on my experience using Exchange. This isn’t some fancy ROI calculator, and of course your mileage may vary. I factored in the total cost of all administration time for all maintenance on Exchange servers, in addition to the hardware and licensing costs.

- Less than 60 users, go with hosted Exchange services. When you add the administrator maintenance costs of SPAM filtering software, Exchange, and anti-virus software, hosted Exchange is always cheaper.

- More than 60, less than 100 users, think about hosted Exchange services. If all users are in one location, it can make sense to go with your own Exchange Server. If users are distributed geographically, that means multiple Exchange servers / VPNs, and more maintenance and hardware, all driving up the cost and thus making hosted Exchange services look more attractive.

- More than 100 users, host your own Exchange Servers.

If a company buys its own hardware, it must depreciate it over time. As an added benefit, with a hosted service there is no initial capital outlay, only a per month charge. As the number of users increases or decreases, the cost goes up or down accordingly.

The hosted Exchange services provide secure transports (HTTPS, RPC over HTTPS) so email is as secure as it can be. Hosted Exchange providers also provide data retention services (usually at some incremental cost) that conform to federal regulations. If you’re a small company, do you have the expertise to cover all the following regulations? SEC Rule 17a-4; NASD Rules 3010, 3013 & 3110; NYSE Rules 342, 440 & 472; RIA SEC Rules 204-2 & 206(4)-7; IDA Bylaw 29.7; FSA, Sarbanes-Oxley Act; HIPAA; GLBA; PIPA & PIPEDA; Patriot Act; Data Protection Act, Basel II, FERC; FCC and more. Most hosted Exchange service providers can support those regulations.

In our organizations the best plans we had were four hour, standard business hours recovery times. This meant that if the main server on the east coast failed after hours, someone on the other side of the world would have to wait a day before they could get back to using email. With hosted Exchange services, we get 24/7 availability. There are some down times for server maintenance, but they are scheduled and announced in advance (usually at 11:00 PM EST, so no one has complained yet).

I’ve used Sherweb hosted Exchange services since 2008. I’ve been extremely happy with the service.

What’s the downside to hosted Exchange services? The add-on charges. There are additional charges per month for email forwarding to an external address. Additional charges per month for each SMTP user account ($1 / mo) also add up. There are also additional charges per month for each “resource” mailbox used for scheduling (like a conference room). And there are additional charges for Blackberry users. All these need to be taken into account when planning the budget for a hosted Exchange service, but the total is often still significantly cheaper than hosting Exchange on your own.

Ballpark costs used in estimating: $2000 server depreciated over 4 years. $1600 in OS and Exchange software. $2500 for installation, setup, and configuration (one time). $5,500 per year for Exchange / Windows server maintenance, updates, backups, monitoring, and support.
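The ballpark figures can be turned into a rough break-even calculation. This is only a sketch: spreading the software and setup costs over the same four years as the server is an assumption of the sketch, the class and method names are hypothetical, and the hosted add-on charges mentioned above are left out.

```csharp
using System;

public static class ExchangeCostSketch
{
    // Ballpark figures from the post. Amortizing software and setup over
    // four years, like the server, is an assumption of this sketch.
    public const decimal Server = 2000m;
    public const decimal Software = 1600m;
    public const decimal Setup = 2500m;
    public const decimal MaintenancePerYear = 5500m;
    public const int Years = 4;

    // Yearly cost of running your own Exchange server.
    public static decimal InHousePerYear()
    {
        return (Server + Software + Setup) / Years + MaintenancePerYear;
    }

    // Yearly cost of a hosted service at a flat per-user monthly price.
    public static decimal HostedPerYear(int users, decimal perUserPerMonth)
    {
        return users * perUserPerMonth * 12m;
    }

    // Smallest user count at which hosted costs at least as much as in-house.
    public static int BreakEvenUsers(decimal perUserPerMonth)
    {
        return (int)Math.Ceiling(InHousePerYear() / (perUserPerMonth * 12m));
    }
}
```

Under these assumptions the in-house cost works out to $7,025 per year, and the break-even point falls at 66 users for a $9 plan and 42 users for a $14 plan, which is in the same neighborhood as the 60 user rule of thumb above.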


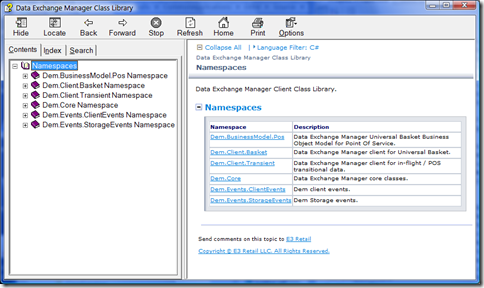

MSDN Style Class Documentation

NDoc is dead. Long live NDoc! How to generate MSDN style documentation using Sand Castle on Visual Studio 2008.

History

In the mid 90’s when COM was king, generating API documentation for classes was a tedious and arduous task. The moment the documentation was published, it was often out of date due to changes the development team had already made. One company I worked for in the retail software domain generated an entire toolset (OO TOOL) to diagram, generate code, generate a data model and generate documentation from a single source.

An interesting side note: one of the key members on the OO TOOL team was Mark Collins. Mark worked with me in the UK for two years at Fujitsu / ICL and went on to write several articles and even a book on Windows Workflow. The tool was the first successful attempt I had seen in a Microsoft based project to keep source and documentation updated using automated tools.

When .Net entered the picture, Visual Studio provided an interesting feature to allow code comments in C++/C# to be extracted to an XML file. Not to be outdone, VB Commenter came out so that VB programmers could do the same for VB code. With code generating XML style documentation, the next step was to convert the documentation to something a little more searchable and user friendly. NDoc came out and was an instant hit. NDoc, when combined with the HTML help generator, was able to generate compiled HTML output. While a bit of work, it was infinitely better than older proprietary tools and definitely more intuitive than Sand Castle.

When .Net 2 came about, it changed some things that required NDoc to make some fairly non-trivial changes. Kevin Downs, one of the original NDoc authors, decided to discontinue his efforts on NDoc amidst personal threats and the additional unpaid effort required to move to .Net 2. Compounded by an announcement by Microsoft that it would create a competing product called Sand Castle, NDoc subsequently died.

Code Is The Documentation

If Sand Castle wounded NDoc then IntelliSense killed and buried it. The MSDN style of documentation is limited in its usefulness. It’s too focused on a specific method / property to be helpful, difficult to deploy (even when done as a website) and becomes out of date extremely quickly. When Visual Studio added IntelliSense in 1996, it really changed the way developers used documentation. Books, manuals, and API documentation were all used at the time. With .Net, the API information could be shipped alongside a dll, and .Net reflection combined with IntelliSense could pull that documentation out and put it directly in the hands of developers.

Show Me The Code

I suspect IntelliSense and the Go To Definition feature of Visual Studio get used more than the MSDN online style documentation. Between the two features, a quick reference to a dll and an appropriate using statement, gives 80% of the information needed to use a class library. Similar in concept to the man page, the IntelliSense documentation provides the documentation you need, when you need it and where you need it.

IntelliSense provides the API documentation like a dictionary provides the list of words for a language. However, it doesn’t tell you how to combine the information to achieve a specific purpose. The documentation for fopen() never told you about fflush(). You had to know this or read it in a book or learn it from someone who knew it. Blogs with examples have filled the knowledge gap, and when that isn’t enough you can search Stack Overflow for expert answers. When IntelliSense documentation is combined with an example or two, we have just about everything needed for the typical class library programmer.

A New Programming Documentation Era

It was subtle at first, but we have transitioned into a new era of programming. With the rapid changes in technology came a rapid way of keeping documentation of the technology up to date. Generated static printed documents no longer work in an era when technology changes many times a year.

- API documents were replaced by IntelliSense and Go To Definition

- Designs can be documented by stub code and XML comments quicker than they can with traditional Word documents.

- Books were replaced by Blogs and search engines

- Code examples were added to Blogs to explain “How to” use the technology

- Code formatters and Syntax Highlighters were added to Blogs to put a more coding friendly face on examples

- Questions that were answered by support forums are now answered on Stack Overflow with much better answers than the “support staff” ever were able to provide.

Give Me That Old Style MSDN Documentation

With all the new advancements in technology, MSDN style documentation can still be useful about 5% of the time to pull an overall view of documentation together or to provide documentation in a form for someone who doesn’t have a dll, but needs to see what a class library can do. Also, sometimes you do get paid by the pound for generating documentation. Then there are those RFPs that actually want to see a printed form of the documentation that won’t be used by any programmers, but does prove that you have enough staff to generate documentation worthy of purchasing the product.

If you want to pull all the IntelliSense information out into HTML or compiled HTML Help, you’re going to need some help. Microsoft has Sand Castle to build those documents.

How To Use Sand Castle

The first thing to know about Sand Castle is it’s not enough to do the job. You need some tools to help Sand Castle generate the help documentation.

The key steps I used to generate documentation with Sand Castle:

- Mark all public classes with XML comments, i.e. ///<summary>Describe the class.</summary>

- Download and Install Sand Castle via the MSI.

- Download and Install the Sand Castle Help File Builder (SHFB) MSI by Eric Woodruff

- Download and Install / Patch the Sand Castle Styles

- Run SHFB

- Add documentation sources (csproj or dll)

- Add references (csproj or dll)

- Set the help file format and other SHFB options (I recommend using the MemberName naming method to get html links with names instead of Guids)

- Run SHFB build, wait, wait, done
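For the first step, XML comments on a public class and its members might look like the sketch below. The namespace, class, and method are hypothetical examples. The project also needs to emit the XML documentation file (the XML documentation file build setting in Visual Studio, or the /doc compiler option) so the help builder has something to read.

```csharp
using System;

namespace DocSample
{
    /// <summary>Converts temperatures between scales.</summary>
    public static class TemperatureConverter
    {
        /// <summary>Converts degrees Celsius to degrees Fahrenheit.</summary>
        /// <param name="celsius">Temperature in degrees Celsius.</param>
        /// <returns>The equivalent temperature in degrees Fahrenheit.</returns>
        public static double ToFahrenheit(double celsius)
        {
            return celsius * 9.0 / 5.0 + 32.0;
        }
    }
}
```

The same summary, param, and returns text is what IntelliSense shows when the dll and its XML file are referenced from another project.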

Sand Castle documentation for actually using the product is hard to come by. I did find the Sand Castle GUI tool (located at C:\Program Files\Sandcastle\Examples\generic after installing the MSI). This tool was a little useful in selecting the DLLs and building output, but the SHFB tool is much more user friendly.

Sand Castle won’t fix the out of date documentation problem, but when combined with SHFB it does help when you need to generate something a little more solid than IntelliSense documentation.

Example

Below is an example of compiled help style documentation generated using Sand Castle and SHFB with the hana presentation style.

References

- Codeplex Sand Castle Help File Builder

- NDoc 2 Is Officially Dead

- Sand Castle CTP And The Death Of NDoc

- NDoc Wikipedia Entry

- Ndoc at Sourceforge

- Comparison of documentation generators

- VB Commenter

- Beginning WF Windows Workflow .NET by Mark Collins

- Beginning WF: Windows Workflow in .NET 4.0 at Apress

- Share This Point

- IntelliSense

- Syntax Highlighter

- MSDN online

- Sand Castle Blog

- SandCastle Builder at Code Project

- C# XML Comment Documentation

- SHFB Installation Instructions

- DocProject for Sandcastle

- Doxygen

- XML Comments Let You Build Documentation Directly From Your Visual Studio .NET Source Files (on MSDN Magazine)

- C# Documenting and Commenting (on CodeProject)

- C# and XML Source Code Documentation (on CodeProject)

- C# Tutorial Lesson 19: Code Documentation


Field Codes

Using field codes to replace values in content, templates, and reports allows users and designers a great deal of flexibility for creating and managing content and templates without requiring code changes.

This article is a summary of how to use field codes in content, templates, or reports and how to convert them to object property values and format and replace them when displayed or rendered. This is based on a similar pattern that Microsoft Word uses when formatting fields. It’s also commonly used in WordPress and WordPress themes.

Field Code Syntax

The most important aspect of the design is picking a syntax that can be easily identified within the content and doesn’t conflict with true content. Braces { } identify the field marker within the content and are easy to parse. The symbols $, %, < >, ( ) or [ ] could be used for this pattern as well.

The field marker contains two comma separated values: the named type and property, separated by one or more periods, and the format string. Quotes around each token make it easier to identify the tokens within the field marker and to avoid mistaking the end of the format string for the end of the field marker.

{"object.expression" [, "format"]}

The brackets denote that the format string is optional.

- Field marker: {"object.expression", "format"}

- Object: A valid object name within the context used for binding

- Expression: "property" – must be a valid property expression

- Format string (C# String.Format): "{0:yyyy-MM-dd}" – optional

Depending on the application needs, a default type can be used and only the properties can be used for the type token, or multiple types can be used.

ASP.NET 2.0 uses a similar pattern for formatting data binding:

<%# Eval("expression"[, "format"]) %>

<%# Eval("Price", "{0:C}") %>

<%# Eval("Price", "Special Offer {0:C} for Today Only!") %>

Field Code Examples

- Summary: {"Summary"}

- Keywords: {"Item.Keywords"}

- Link: {"Item.Link"}

- Create Time: {"Item.CreateTime", "{0:yyyy-MM-dd HH:mm tt (zzz)}"}

- Create TimeUtc: {"Item.CreateTimeUtc", "{0:yyyy-MM-dd HH:mm tt}"}

- ViewCount: {"Item.ViewCount", "{0:###,###.##}"}
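The format token in each example is an ordinary String.Format composite format string. A small sketch of how such a token could be applied to a value follows. The class name is hypothetical, and using the invariant culture is an assumption made here so the output does not depend on the machine's regional settings.

```csharp
using System;
using System.Globalization;

public static class FieldFormatDemo
{
    // Applies a field-code format token such as "{0:yyyy-MM-dd}" to a value.
    public static string Render(string format, object value)
    {
        return String.Format(CultureInfo.InvariantCulture, format, value);
    }
}
```

For example, Render("{0:yyyy-MM-dd HH:mm}", new DateTime(2009, 12, 3, 14, 5, 0)) gives 2009-12-03 14:05, and Render("{0:###,###.##}", 1234567.891) gives 1,234,567.89. One thing to watch for: a format made only of # placeholders renders the value 0 as an empty string, so a zero ViewCount would produce no text at all.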

Matching Regular Expressions

Two regular expressions are used to parse the content or template. The first matches the entire field marker (FieldRegex); the second matches the individual quoted tokens within the field marker (TokenRegex), such as "type.property" and the optional format string.

The following example parses through the Body string and replaces the field marker with the appropriate formatted string.

    using System.Text.RegularExpressions;

    // Matches a whole field marker, e.g. {"Item.Link"} or {"Item.ViewCount", "{0:###,###.##}"}.
    private static readonly Regex FieldRegex = new Regex(@"\{\"".*?\""\}", RegexOptions.IgnoreCase);
    // Matches each quoted token inside a field marker.
    private static readonly Regex TokenRegex = new Regex(@"\"".*?\""", RegexOptions.IgnoreCase);
    private static readonly char[] charTrimSeparators = new char[] { '"', ' ' };
    private static readonly char[] charKeySeparators = new char[] { '.', ' ' };

    // Inside the method that renders Body for the current item:
    MatchCollection myMatches = FieldRegex.Matches(Body);
    foreach (Match myMatch in myMatches)
    {
        string matchFieldSet = myMatch.ToString();
        MatchCollection tokenMatches = TokenRegex.Matches(matchFieldSet);
        // First token is the "type.property" key.
        string keyAll = tokenMatches[0].ToString().Trim(charTrimSeparators);
        object obj = GetObj(item, keyAll);
        string value = String.Empty;
        if (obj != null)
        {
            switch (tokenMatches.Count)
            {
                case 2:
                    // Second token is the optional format string.
                    string format = tokenMatches[1].ToString().Trim(charTrimSeparators);
                    value = String.Format(format, obj);
                    break;
                case 1:
                    value = obj.ToString();
                    break;
            }
        }
        // Markers that resolve to nothing are left in place.
        if (!String.IsNullOrEmpty(value))
        {
            Body = Body.Replace(matchFieldSet, value);
        }
    }

Using Reflection To Get Types And Properties

The tricky part is to use reflection to get the property in the field marker and find it in the object.

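    using System;
    using System.Reflection;

    // Resolves "Property" or "Object.Property" against item.
    // Returns null when the key is empty or the property does not exist.
    private static object GetObj(Sometype item, string keyAll)
    {
        object obj = null;
        string key;
        string[] keyTokens = keyAll.Split(charKeySeparators, StringSplitOptions.RemoveEmptyEntries);
        switch (keyTokens.Length)
        {
            case 0:
                return null;
            case 1:
                // Only a property name was given; the default type (Sometype) is implied.
                key = keyTokens[0];
                break;
            default:
                // "Object.Property": the second token is the property name.
                key = keyTokens[1];
                break;
        }
        Type type = item.GetType();
        PropertyInfo property = type.GetProperty(key);
        if (property != null)
        {
            obj = property.GetValue(item, null);
        }
        return obj;
    }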

This reflection pattern works well for “Object.Property”. However, it becomes significantly more difficult for multiple levels of objects, i.e. “Object.Object.Object.Property”. If anyone has suggestions on how to resolve that without hand parsing each object, please let me know.
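One possible approach to the multi-level case, offered as a sketch rather than a tested solution for this code base: walk the dotted path one token at a time, using the value of each property as the object for the next lookup. The PropertyPath class and the Author and Post demo types are hypothetical, and the sketch assumes the path contains only property names (any leading object name has already been stripped).

```csharp
using System;
using System.Reflection;

public static class PropertyPath
{
    // Follows a dotted property path such as "Author.Name" from root.
    // Returns null if any step is null or names a property that does not exist.
    public static object Resolve(object root, string path)
    {
        object current = root;
        string[] names = path.Split(new[] { '.' }, StringSplitOptions.RemoveEmptyEntries);
        foreach (string name in names)
        {
            if (current == null)
            {
                return null;
            }
            PropertyInfo property = current.GetType().GetProperty(name.Trim());
            if (property == null)
            {
                return null;
            }
            current = property.GetValue(current, null);
        }
        return current;
    }
}

// Hypothetical types used only to demonstrate the lookup.
public class Author
{
    public string Name { get; set; }
}

public class Post
{
    public string Title { get; set; }
    public Author Author { get; set; }
}
```

With a Post whose Author.Name is "Ann", PropertyPath.Resolve(post, "Author.Name") returns "Ann", and a path with an unknown property returns null instead of throwing.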

Performance Implications

Using reflection and the String.Replace method can add significant processing time if there are numerous values to find and replace. Building a dictionary of the names / values that are found would in theory be faster than using reflection each time, but I’ve not measured this direct comparison. I have seen the reflection approach take about 10 ms (on a Core 2 Duo 2.5 GHz) to retrieve and replace one property.

If a Dictionary is used, then converting the content to a StringBuilder and using StringBuilder.Replace instead of String.Replace should also improve performance.
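A minimal sketch of the dictionary plus StringBuilder idea, assuming the values have already been looked up and formatted and that only simple markers without a format token, such as {"Item.Link"}, are being replaced. The class name is hypothetical and, as noted above, the speed-up is unmeasured.

```csharp
using System;
using System.Collections.Generic;
using System.Text;

public static class FieldReplacer
{
    // Replaces every {"key"} marker in body with the matching dictionary value.
    public static string ReplaceAll(string body, IDictionary<string, string> values)
    {
        var builder = new StringBuilder(body);
        foreach (KeyValuePair<string, string> pair in values)
        {
            builder.Replace("{\"" + pair.Key + "\"}", pair.Value);
        }
        return builder.ToString();
    }
}
```

The dictionary can be filled once per item, so repeated markers for the same property cost one lookup instead of one reflection call each.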

Once the content is parsed and the field tokens are replaced, caching the replaced content is definitely recommended where possible.

References

- .Net Custom Numeric Format Strings

- .Net Custom DateTime Format Strings

- Regular Expression Based Token Replacement in ASP.NET

- Token Replacement in ASP.NET

- Template Tag Shortcodes: WordPress Plugin

- Vine Type Reference Variables

- WordPress Template Tags

- WordPress Theme Tags

- Simplified and Extended Data Binding Syntax in ASP.NET 2.0


Features, Flags, Updates And Branches

Version control systems are wonderful tools or terrible tools, depending on the process used to manage the files stored in them. They allow tracking changes, reversing updates, comparing to older versions, and creating multiple branches and variations of files ad infinitum. What are good rules to keep good tools from going bad?

Rule #1 Don’t Break The Base

Don’t check code in that breaks the trunk code base.

Organizations generally follow two philosophies for code check-ins: Check-in daily under penalty; or Don’t break the base.

Check-in daily under penalty as a philosophy is problematic because developers are forced to check in code that may or may not compile. Organizations use this as a backup mechanism, but it’s a poor solution to that problem.

Don’t break the base as a philosophy can cause headaches because a developer may go long periods of time (days/weeks) with multiple code changes, and then they lose the benefit of rolling back to a level of code that was checked in. This weakness can be resolved by adding a scratch area in the source control system that developers can use daily / hourly and that is not part of the main code base structure. Once developers are confident it won’t break the base, they can check in to the main trunk without causing others problems. I don’t like two places for the same thing, but in practice the scratch area works well and is often used sparingly. Labels and branches are not good for scratch work.

The most common break the base problem isn’t really code that doesn’t compile, it’s missing files: files that were on the developer’s machine but weren’t checked in. Having a tool like Sourcegear Vault that shows files on the disk that haven’t been added makes this problem much less likely to occur. Before my teams used Sourcegear, this problem happened a lot. It still happens (even to me), but much less frequently than in the past.

Rule #2 Label Your Releases

Label all releases (both internal and external).

Labels are like breadcrumbs. They can be applied to a common root directory, and all child folders and files get the same label. Adding labels when releases are made allows you to go back and cut a branch from a label later if needed, or to pull the source for that particular label.

I like labels in the form “Rel 2.0.3.0 2009 12 03”. This includes the release number, and the date the release was made. Adding the date might be redundant because most source control systems keep the date a label is made, but not all tools show the date unless you ask them to. Making it part of the label makes it easy to find two pieces of information in one quick scan.
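If the label step is scripted, the label text can be generated so the format stays consistent. A small sketch, with a hypothetical class name, that builds a label in the form shown above:

```csharp
using System;
using System.Globalization;

public static class ReleaseLabel
{
    // Builds a label such as "Rel 2.0.3.0 2009 12 03" from a version and release date.
    public static string Create(Version version, DateTime releaseDate)
    {
        return String.Format(CultureInfo.InvariantCulture,
            "Rel {0} {1:yyyy MM dd}", version, releaseDate);
    }
}
```

ReleaseLabel.Create(new Version(2, 0, 3, 0), new DateTime(2009, 12, 3)) returns Rel 2.0.3.0 2009 12 03. Putting the year first keeps labels for the same release number sorted by date.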

In some projects I’ve even used the “only build from a label” approach. While extremely rigid, it works when teams are frantically checking in code and a build machine is used specifically to create daily builds. If it’s a manual process, this rarely works reliably. If the label / get / build process is automated, then it has better success.

Rule #3 Prune Your Branches

Anytime a branch is created, it should be pruned (after 30, 60, 90, or 180 days) and folded back into the trunk.

Source control branches give the illusion of control. They are generally good for two things:

- Marking a point in the code for generating an emergency hot fix for a customer.

- Marking a point in time for a base to be used when migrating to another platform or architecture.

Branches are problematic because they create multiple places for people to put things (which subsequently get lost) and multiple places for people to get things (so they inadvertently get a version other than the one they wanted). I’ve learned to try to avoid branches if at all possible. Multiple source control branches add expense and time, but don’t add a lot of value.

Branches are complex for source control software to implement. They often have issues (not so much software issues as issues with documentation on how to make the source control system achieve the behavior you want). If you’re running SourceSafe and try branches, you are sadistic. Upgrade to a real source control system like Sourcegear Vault or PVCS (Dimensions, Merant, Intersolv, Serena or the current PVCS product name of the day). I’ve reviewed CVS and Subversion, but the architecture and features of the rich clients for Sourcegear Vault win hands down despite having to pay a license for each user to use them (although Sourcegear is free for one user).

In my experience, Sourcegear Vault is the only tool that has an architecture that allows smart clients and a central web service that works well over the internet for multiple teams in multiple locations. All other solutions I’ve evaluated, including Microsoft Visual Studio Team Foundation, require significant investment in hardware and only work well over a LAN. These solutions were designed as thick client / server solutions for LANs, not WANs or the Internet. I have teams in India, California, Oklahoma, and Raleigh that have used a common Vault solution for over five years, and it’s worked flawlessly alongside Visual Studio (2003, 2005, 2008, 2010).

Rule #4 Properly Dispose Of Flags

Anytime a code feature flag is created, it should be reviewed to see if it’s still applicable (after 90, 180, or 360 days). Flags that are no longer applicable should be removed to simplify the code base.

Just like taking care of national or state flags with flag etiquette or the flag code, code flags should be evaluated, cleaned up and removed when they aren’t needed anymore. A code base will be encumbered by the weight of too many flags.

The Flickr developer blog describes this common technique, a long-running practice of mine, in its post Flipping Out on the topic of code flags.

    if (flag)
    {
        // New Feature
    }
    else
    {
        // Existing Behavior
    }

The downside of this approach is that if flags are never removed, it becomes increasingly difficult or impossible to add new features in sections of conditional code. It can also be tedious to add the flags and the code, but if it’s practiced, this problem is usually overcome quickly, becoming second nature. The upside of this approach is it helps significantly with Rule #1 Don’t Break The Base. Just make sure the default is set so the flag is off unless specifically turned on.
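The “off unless specifically turned on” default can be enforced in one place. The sketch below is a hypothetical in-memory flag store, not code from any particular project. A real system would more likely load the flags from configuration or a database.

```csharp
using System;
using System.Collections.Generic;

public static class FeatureFlags
{
    private static readonly Dictionary<string, bool> Flags =
        new Dictionary<string, bool>(StringComparer.OrdinalIgnoreCase);

    // Turns a flag on or off explicitly.
    public static void Set(string name, bool enabled)
    {
        Flags[name] = enabled;
    }

    // A flag that was never set is treated as off, so new code paths
    // stay dark until someone deliberately enables them.
    public static bool IsEnabled(string name)
    {
        bool enabled;
        return Flags.TryGetValue(name, out enabled) && enabled;
    }
}
```

The conditional above then becomes if (FeatureFlags.IsEnabled("NewCheckout")) { ... } else { ... }, where "NewCheckout" is a made-up flag name. Keeping all flag names behind one class also makes it easier to find and remove stale flags during the periodic review.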

For the OCD flagger, having flags at the system level, per account instance, or per user level gives ample opportunity for this approach. Code flags are perhaps best used sparingly, but when they are needed they are simpler to deal with than branches.